Reading some articles today, one could think that AI is omnipotent. However, it’s essential to remember that AI has limitations, and there are many of them. Therefore, you should not expect it to be a universal cure for all problems. AI is still a relatively new technology, and its capabilities have plenty of room to improve.

There are some incredible things you can achieve using AI. It’s also true that it can help your business save money by automating multiple processes and offering valuable analytics. However, some businesses take risks and apply AI in every situation. Such reckless use of technology can seriously damage your business’s security and revenue.

According to the AI Incident Database, the number of AI misuse incidents in 2023 increased by 32.3% compared to the previous year. Today, businesses must be realistic when weighing the pros and cons of implementing AI. Devtorium’s Business Analysis and Information Security departments have the expertise to forecast probable risks caused by AI or other digital systems. In this blog post, our specialists outline the limitations of AI and the risks of implementing it without a system of fail-safes.

AI Limitations and Risks by Category

Data Dependency

Data is the main resource on which any AI system runs, since algorithms learn from the data they are given. Therefore, AI performance and decision-making depend heavily on the quality, availability, and potential bias of that data.

AI limitations caused by data:

- Creativity

While AI is good at generating content based on existing data, it struggles with original or innovative thinking.

- Flexibility

AI struggles to adapt to new or unexpected situations that fall outside its training data.

- Bias

Bias can creep in at various stages of the AI lifecycle, but it most often originates from the data used to train and test the models.

Contextual Misunderstanding

One thing AI cannot do is truly understand context, at least not at the current level of technology. This lack of contextual understanding limits how well AI can interpret information. In other words, AI can fail to recognize social context or grasp subtle nuances.

AI limitations caused by context:

- Natural Language Processing (NLP)

In NLP tasks such as text analysis or translation, AI may have difficulty understanding language nuances such as idioms, slang, and dialects.

- Visual recognition

In computer vision tasks, AI algorithms can fail to recognize objects within their broader context.

- Social interactions

AI-driven chatbots may struggle to catch the nuances of human conversation, including tone, sarcasm, or implied meanings. You can learn more about the capabilities of AI-powered voice bots in our dedicated article.

Ethical Concerns

AI’s ethical limitations are hard to even measure, because this technology doesn’t operate within a framework that ethics can govern. Therefore, programming AI algorithms that make truly ethical decisions is nearly impossible. Machines struggle to replicate feelings and emotions, and they cannot make moral judgments the way humans do.

AI limitations caused by ethics:

- Lack of empathy

AI lacks emotional intelligence and cannot empathize with human emotions. For example, it cannot weigh emotional well-being as a factor in its decisions.

- Cultural contexts

AI systems may struggle to understand human cultural diversity. This can lead to biased or culturally insensitive outcomes, such as stereotyping. As a result, implementing AI in some areas might reinforce existing inequalities.

The Black Box Problem

The Black Box Problem refers to the opacity of AI decision-making processes. AI algorithms are so complex that it is hard to understand how they arrive at their conclusions, so humans may not be able to trust them completely. As a result, giving AI any role in which it makes decisions that affect human lives becomes a significant risk.

AI limitations caused by a lack of transparency:

- Error correction

When AI systems make errors or produce unexpected outcomes, understanding why those errors occur is crucial. However, without a clear view of the internal workings of black-box AI models, diagnosing errors becomes much more difficult.

- Trust

Users may find it challenging to rely on AI when they cannot understand how systems make decisions. The black box problem can be particularly concerning for critical applications such as healthcare or criminal justice.

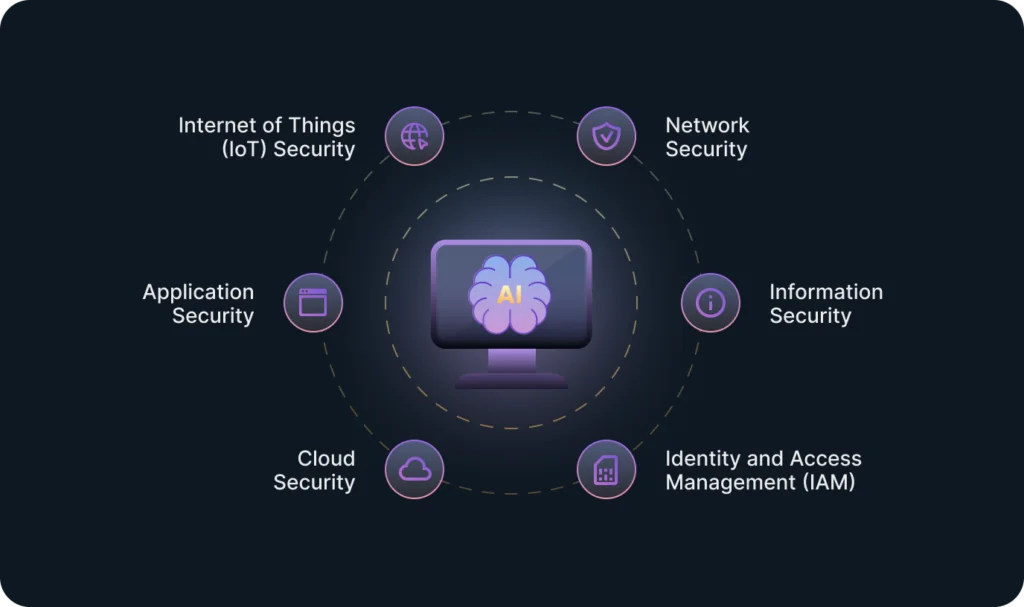

Privacy and Security

Because AI cannot function without data, it raises concerns about how personal data is collected, stored, and used. AI technologies also introduce new cybersecurity risks. Malicious actors may exploit vulnerabilities in AI systems to launch attacks, posing new threats to financial systems, critical infrastructure, and national security.

AI limitations in the security field:

- Tracking

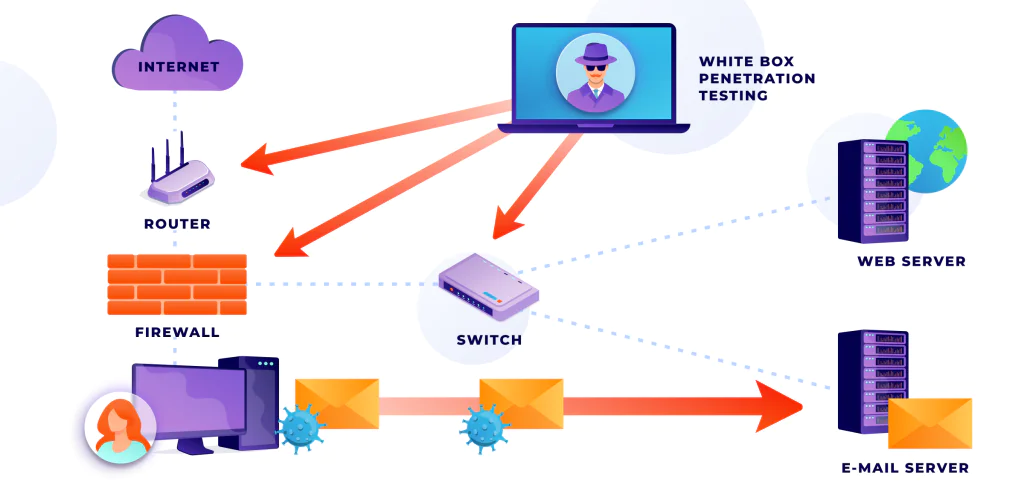

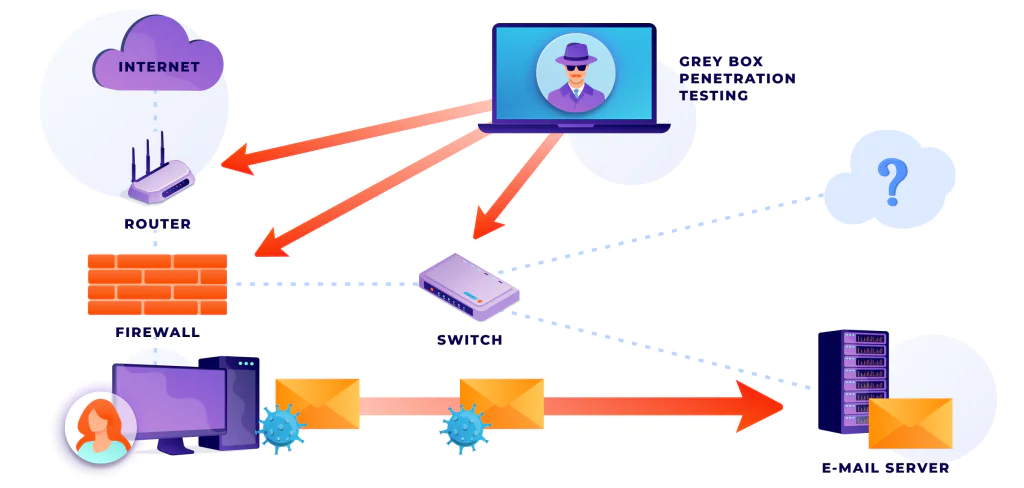

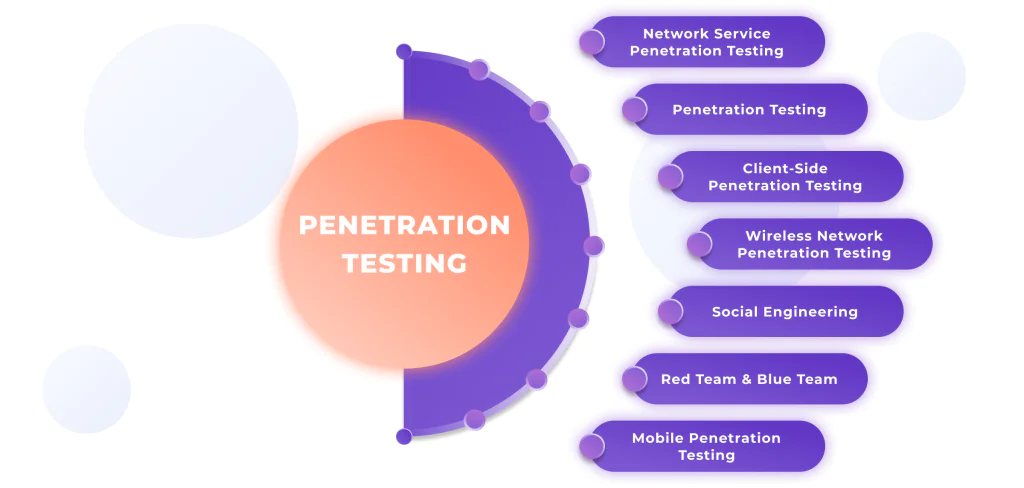

AI-powered surveillance technologies, such as facial recognition and biometric systems, threaten privacy by enabling constant monitoring and tracking of individuals without their consent. - Malicious use

AI technologies can be leveraged for malicious purposes, including generating convincing deepfake videos, launching sophisticated phishing attacks, and automating cyberattacks. - Personal data

AI systems may analyze and process personal data without adequate safeguards. This could lead to unauthorized access, identity theft, financial fraud, and other cases of data misuse.

Bottom Line: How to Avoid Reckless Risks and AI Limitations?

At the current level of technology, it’s impossible to avoid AI’s risks and limitations entirely. Therefore, it’s imperative to address them responsibly to maximize the benefits of AI implementation. Devtorium professionals are always ready to help you understand the risks and develop efficient, safe, and secure AI applications for your business. Contact our team for a free consultation on how to use AI to your best advantage.

To learn more about the Devtorium Team and the multiple capabilities of AI, check out our other articles: