
Every time new technologies enter our lives, we must become pioneers and adapt to the new rules of the game. AI is no exception. It has already made its way into every sphere, from entertainment to science, and there are countless ways to use AI in real-life business. However, AI cannot be left unregulated, without specific frameworks and rules. If such a powerful tool falls into the wrong hands, it can be used for selfish or harmful purposes.

The prospect of AI being used for deepfakes, fraud, and the theft of personal data or intellectual property is not just concerning but an urgent issue. The Center for AI Crime reports a staggering 1,265% increase in phishing emails and a nearly 1,000% rise in credential phishing in the year following the launch of ChatGPT. These figures underline the need for AI regulation.

In response, major jurisdictions such as the EU and the US have started developing rules for AI to protect their citizens, companies, and institutions while supporting technological development and investment. These regulations contain critical nuances that must be considered when developing or implementing AI technologies. In this blog post, we explore and compare European and American AI regulations.

The EU AI Regulation: AI Act

The European Union's AI Act is the world's first comprehensive legal framework for regulating AI. In essence, it is a set of rules aimed at ensuring the safety of AI systems in Europe. The European Parliament approved the AI Act in March 2024, followed by the Council of the EU in May 2024. The act entered into force in August 2024 and becomes fully applicable 24 months later, but some provisions apply earlier. For example, the bans on unacceptable-risk AI practices apply from February 2025, six months after entry into force.

In many respects, the act resembles the GDPR, the EU's data privacy regulation. For example, both protect the same group of people: everyone within the EU. Moreover, even if a company or developer of an AI system is based abroad, it must comply with the AI Act if its AI system is placed on the EU market. The regulation also applies to importers and distributors of AI systems in all 27 EU member states.

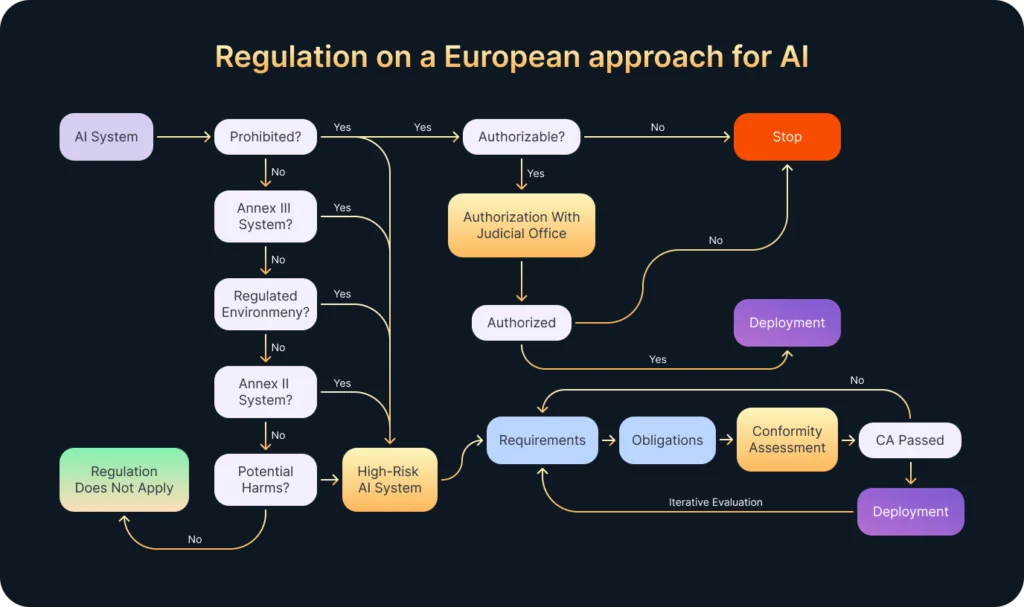

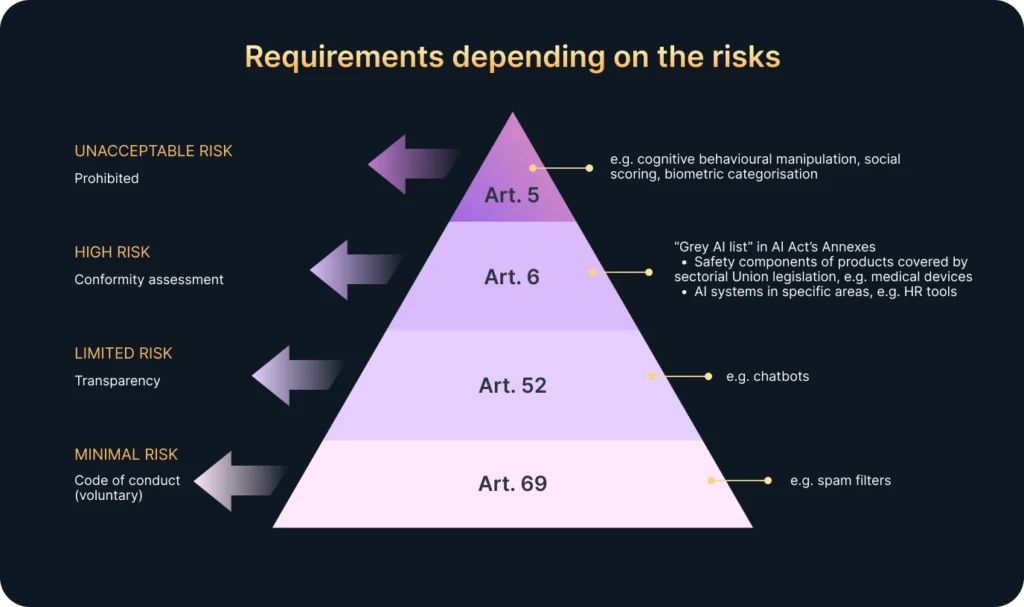

The risk-based approach of the AI Act is comparable to the GDPR’s. It divides AI systems into four risk categories:

- The minimal (or no) risk category is not regulated by the act (e.g., AI spam filters).

- Limited-risk AI systems must follow transparency obligations (e.g., users must be informed when interacting with AI chatbots).

- High-risk AI systems are strictly regulated by the act (e.g., AI systems used as safety components in managing critical infrastructure such as water, gas, or electricity supply).

- Unacceptable-risk AI systems are prohibited (e.g., biometric categorization that infers sensitive characteristics such as race or political opinions).

Engaging in prohibited AI practices can result in fines of up to 35 million EUR or 7% of a company's total worldwide annual turnover, whichever is higher.
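Under the AI Act, the fine ceiling for prohibited practices is the higher of two amounts: a fixed 35 million EUR or 7% of worldwide annual turnover. The minimal Python sketch below makes this rule concrete. The function name and constants are hypothetical and exist only for illustration. The sketch assumes turnover is given in whole euros and ignores the separate rules the Act sets for SMEs and start-ups. It computes only the legal maximum, not the fine a regulator would actually impose.

```python
# Illustrative sketch (hypothetical names): upper limit of a fine for prohibited
# AI practices under the EU AI Act. Assumes turnover is the company's total
# worldwide annual turnover in whole euros; SME/start-up rules are ignored.

FIXED_CAP_EUR = 35_000_000   # fixed ceiling: 35 million EUR
TURNOVER_SHARE_PERCENT = 7   # alternative ceiling: 7% of annual turnover


def max_fine_prohibited_practice(annual_turnover_eur: int) -> int:
    """Return the maximum possible fine in EUR: the higher of the two ceilings."""
    if annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    # Integer arithmetic avoids floating-point rounding in the percentage.
    turnover_cap = annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    # 100 million EUR turnover: 7% is 7 million, so the 35 million cap applies.
    print(max_fine_prohibited_practice(100_000_000))    # 35000000
    # 1 billion EUR turnover: 7% is 70 million, which exceeds 35 million.
    print(max_fine_prohibited_practice(1_000_000_000))  # 70000000
```

For any turnover up to 500 million EUR, 7% is at most 35 million EUR, so the fixed cap is the binding ceiling. Above that threshold, the turnover-based cap is larger.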

The US AI Regulation: Executive Order on AI

Although the United States leads the world in AI investment (61% of total global funding for AI start-ups goes to US companies), its process for creating AI legislation is slower and more fragmented than the EU's. Congress has not yet passed a comprehensive law regulating AI systems. However, in October 2023 the White House issued the Executive Order (EO) on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. It sets federal guidelines and strategies for fairness, transparency, and accountability in AI systems. Like the AI Act, the EO aims to balance AI innovation with responsible development.

The EO also focuses on guiding federal agencies in implementing AI systems and sets out a series of tasks with deadlines. It directs federal agencies to develop responsible AI governance frameworks. The National Institute of Standards and Technology (NIST) plays a leading role by developing technical standards and guidelines that build on its AI Risk Management Framework (AI RMF). This work is meant to shape future guidance while aligning with industry-specific regulations. Federal funding priorities further emphasize AI research and development (R&D) to advance these initiatives.

The most important thing to note about the EO is that it does not have the enforcement power of a law. Instead, it should be viewed as a preparatory stage of AI regulation, and companies planning to work in the US market should gradually implement its recommendations. For example, any AI software development company should start conducting audits, risk assessments, and similar practices to ensure that its AI systems are developed safely.

Comparison: EU AI Act vs. US Executive Order

Legal Force:

The AI Act is an EU regulation, so it is binding law across all member states, and it becomes fully applicable 24 months after entering into force. From then on, everyone providing AI systems in the EU must comply. In contrast, the US Executive Order has less legal force. It sets essential guidelines for federal agencies but lacks the binding authority of a law passed by Congress. Its enforcement is largely limited to federal government activities, so it affects the private sector less directly. Moreover, because it is an executive order rather than a statute, a future president can revoke it.

Regulatory Approach:

The AI Act applies to all AI systems, classifying them from unacceptable to minimal risk so that every AI system, in any industry, falls under a defined set of rules. The US EO focuses on sector-specific regulation, targeting high-impact industries such as healthcare, finance, and defense. This approach leaves more room for innovation, but it may lead to inconsistent risk management across sectors.

Data Privacy:

The AI Act draws on GDPR practices to enforce strict rules on data processing, privacy, and algorithmic transparency. US privacy regulation, by contrast, remains fragmented: state laws such as California's CCPA and Illinois's BIPA apply within their states, but there is no federal AI-specific privacy law.

Ethical Guidelines:

The EU AI Act emphasizes ethical AI development, focusing on fairness, non-discrimination, and transparency. These principles are embedded within the legislation. The US Executive Order promotes similar values but through non-binding recommendations rather than legal mandates.

Support for Innovation:

The EU AI Act aims to balance strict regulation with innovation by supporting AI research and development within an ethical framework, for example through AI regulatory sandboxes where companies can test systems under regulatory supervision. The goal is to foster AI innovation while ensuring public safety. The US supports innovation through federal funding and AI research initiatives, and companies have more flexibility to self-regulate and innovate without the stringent compliance measures seen in the EU.

Conclusion: Challenges of Current AI Regulations

Both the EU and the US face the challenge of balancing AI regulation with innovation. The EU AI Act imposes numerous restrictions that limit the possibilities for developing revolutionary AI software. The US EO offers more flexibility and encourages innovation, but it lacks comprehensive rules. Because AI technology evolves so quickly, regulations struggle to keep pace, and businesses must navigate complex compliance requirements across different regions. Nevertheless, adhering to these regulations is crucial for developers to avoid legal risks and ensure the ethical use of AI.

At Devtorium, we help businesses navigate these challenges by ensuring compliance with the necessary AI regulations. Our team can guarantee that your AI solutions meet both EU and US standards, allowing you to focus on innovation. For more details, contact us today and let Devtorium’s experts guide your AI development toward full regulatory compliance.

To learn more about AI and our services, check out other articles on our website:

- Use of AI in Cybersecurity: Modern Way to Enhance Security Systems

- Will Small Business Be Affected by the AI Bubble Burst?

- How to Use AI in Small Business: Ideas and Practical Applications

- What AI Cannot Do: AI Limitations and Risks